Anyone in technology will know the buzz caused by ChatGPT since its launch, and beyond fooling schoolteachers with human-like essays and passing law exams we’ve seen many interesting real-world use cases already. Of course, the point isn’t that it’s just an interesting plaything - generative AI built on large language models is a powerful technology to integrate into apps, tools, and automated processes. The potential is almost limitless and entirely new categories of digital experience are opened up. As we all know, Microsoft were quick to identify this and their $11 billion investment in GPT creator OpenAI means cutting-edge AI is becoming integrated into their products and services.

So we know that Teams, SharePoint, Viva, Dynamics etc. will evolve quite quickly and with features like Power Apps Ideas and Power Automate AI Copilot, Microsoft are starting to build GPT-3 and Codex capabilities into the maker experience within Power Platform. However, alongside that we want to build GPT into *our* apps and solutions! In this post we’ll explore how to integrate GPT-3 into what you build with the Power Platform – this is significant because being able to call into GPT capabilities from Power Apps, Power Automate, Power Virtual Agents etc. can be hugely powerful. I won’t go into use cases too much here, but perhaps you want to generate content for new products in development or automate contact centre responses. Maybe you want to use it as a classic chatbot able to answer questions or be a digital assistant of some kind. Or maybe you want to analyse, summarise, translate, or classify some content. In this post I’m going to keep us focused on how to implement rather than usage, but the limit might just be your imagination.

The (not particularly) bad news – some implementation work is required, there’s no magic switch in the Power Platform

The good news – it’s as simple as deploying some services in Azure and creating a Power Platform custom connector (which you, or someone in your organisation, may have done already)

To follow along, you’ll need:

- The ability to create an Azure OpenAI instance – note that this requires an application and is currently only available for managed customers and partners. Microsoft assess your use case in line with their responsible AI commitment

- The ability to create and use a custom connector in the Power Platform

- A paid Power Platform license which allows you to use a custom connector (e.g. Power Apps/Power Automate per user or per app plan)

Overall here’s what the process looks like:

If you have experience of connectors in the Power Platform things are reasonably straightforward, though there are some snags in the process. This article presents a step-by-step process as well as some things that will hopefully accelerate you in getting started.

Azure OpenAI – the service that makes it happen

In my approach I’m using Azure OpenAI rather than the API hosted by the OpenAI organisation, because this is the way I expect the vast majority of businesses to tap into the capabilities. For calling GPT or other OpenAI models to be workable in the real world, it can’t just be another service on the internet. Azure OpenAI is essentially Microsoft-hosted GPT – providing all the security, compliance, trust, scalability, reliability, and responsible AI governance that a mature organisation would look for. Unsurprisingly, the OpenAI service falls under Azure Cognitive Services within Azure, and this means that working with the service and integrating it into solutions is familiar. Microsoft are the exclusive cloud provider behind OpenAI’s models, meaning you won’t find them in AWS, GCP or another cloud provider.

Link – form to apply for OpenAI: https://aka.ms/oai/access

Once you’ve received notification that your application for Azure OpenAI has been approved, you’re ready to start building.

Step 1 – create your Azure OpenAI instance

Firstly, in the Azure portal navigate into the Azure subscription where your usage of OpenAI has been approved. Start the process by creating a new resource and searching for “Azure OpenAI”:

There’s only one pricing tier and currently you’ll be selecting from one of three regions where the OpenAI service is available:

- West Europe

- East US

- South Central US

Now finish off by clicking the ‘Create’ button:

Creation will now start:

Once complete, your OpenAI resource will be available. The main elements you’ll use are highlighted below:

Exploring the Azure OpenAI Studio

For the next steps you’ll use the link highlighted above to navigate into the Azure OpenAI Studio – this is the area where OpenAI models can be deployed for use. Like many other Azure AI capabilities, configuration isn't done in a normal blade in the Azure portal - instead there's a more complete experience in a sub-portal. Here’s what the Studio looks like:

Azure OpenAI Studio has a GPT-3 playground, similar to the openai.com site where you may have played with ChatGPT or the main playground. In the Azure OpenAI Studio the playground can be used once you have a model deployed (which we’ll get to in a second), and it’s exactly like OpenAI’s own GPT-3 playground with some ready-to-play examples and tuning options. In the image below I’m summarising some longer text using the text-davinci-003 model:

The playground gives you the ability to test various prompts and the AI-generated results, also giving you info on how many tokens were used in the operations. Pricing is consumption-based and will depend on how many tokens you use overall.
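Because billing follows token usage, a rough cost estimate is simple arithmetic. The sketch below is illustrative only: the function names are hypothetical, and the rate passed in is a placeholder you would replace with the current figure from the Azure pricing page.

```python
def estimate_cost(total_tokens, price_per_1k_tokens):
    """Estimate the cost of an operation from its token count.

    price_per_1k_tokens is an assumed input: look up the real rate for
    your chosen model on the Azure pricing page.
    """
    return total_tokens / 1000 * price_per_1k_tokens


def estimate_monthly_cost(tokens_per_call, calls_per_day, price_per_1k_tokens, days=30):
    """Scale a per-call estimate up to a month of regular usage."""
    return estimate_cost(tokens_per_call * calls_per_day * days, price_per_1k_tokens)


if __name__ == "__main__":
    # Placeholder rate, not a quoted price.
    placeholder_rate = 0.02
    print(estimate_cost(1500, placeholder_rate))
    print(estimate_monthly_cost(1500, 200, placeholder_rate))
```

Prompt tokens and generated tokens both count towards the total, so long prompts add to the bill even when the response is short.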

To give a further sense of what’s available in the Azure OpenAI Studio, here’s the navigation:

To move forward with integrating GPT-3 into our apps, we need to deploy one of the AI models and make it available for use.

Step 2 – choose an OpenAI model and deploy it

Using the navigation above, go into the Models area. Here you’ll find all the OpenAI models available for selection, and for production use you’ll want to spend time in the OpenAI model documentation to establish the best model for your needs. Here’s a sample of the models you can choose from:

Each model offers a different trade-off between capability, cost, and speed of operations. The ‘Finding the right model’ area in the OpenAI documentation has the information you need and here’s a quick extract to give you a sense:

| Model (latest version) | Description | Training data |
|---|---|---|
| text-davinci-003 | Most capable GPT-3 model. Can do any task the other models can do, often with higher quality, longer output and better instruction-following. Also supports inserting completions within text. | Up to Jun 2021 |
| text-curie-001 | Very capable, but faster and lower cost than Davinci. | Up to Oct 2019 |
| text-babbage-001 | Capable of straightforward tasks, very fast, and lower cost. | Up to Oct 2019 |
| text-ada-001 | Capable of very simple tasks, usually the fastest model in the GPT-3 series, and lowest cost. | Up to Oct 2019 |

Text-davinci-003 is the model most of us have been exposed to the most as we’ve been playing with GPT-3, and as noted above it’s the most powerful. It’s worth noting that if your use case relates to GPT’s code capabilities (e.g. debugging, finding errors, translating from natural language to code etc.) then you’ll need one of the Codex models such as code-davinci-002 or code-cushman-001. Again, more details in the documentation. Most likely you just want to get started experimenting, so we’ll deploy an instance of the text-davinci-003 model.

To move forward with this step, go into the Deployments area in the navigation:

In the dialog which appears, select the text-davinci-003 model (or another you choose) and give it a name – I’m simply giving mine a prefix:

Hit the ‘Create’ button and the model is created instantly:

The model is now ready for use – you can test this in the playground, ensuring your deployment is selected in the dropdown. The next step is to start work on making it available in the Power Platform.

Step 3.1 – create the custom connector in the Power Platform

Background:

Azure OpenAI provides a REST API, and in this step we’ll create the custom connector to the API endpoint of your Azure OpenAI service and a connection instance to use. From the REST APIs provided, it’s the Completions API which is the one we’ll use – this is the primary service to provide responses to a given prompt. A call to this endpoint looks like this:

POST https://{your-resource-name}.openai.azure.com/openai/deployments/{deployment-id}/completions?api-version={api-version}

In a JSON body, you’ll pass parameters for the prompt (i.e. your question/request) and various optional parameters to instruct GPT on how to operate, including max_tokens and temperature. All this is documented and the good news is you can stay simple and use defaults.

You can authenticate to the API using either AAD (which you should do for production use) or with an API key. In my example below I’m using the API key to keep things simple, and this approach is fine as you get started. A header named “api-key” is expected and you pass one of the keys from your Azure OpenAI endpoint in this parameter.
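To make the shape of the call concrete before wrapping it in a connector, here is a minimal Python sketch using only the standard library. It assumes API key authentication as described above. The function names are hypothetical, and the `api-version` value is an assumption, so check the API docs for the version that applies to your resource.

```python
import json
import urllib.request

# Assumption: replace with the api-version listed in the Azure OpenAI docs.
API_VERSION = "2022-12-01"


def build_completion_request(resource_name, deployment_id, api_key, prompt,
                             max_tokens=None, temperature=None,
                             api_version=API_VERSION):
    """Build (but do not send) a POST request to the Completions endpoint."""
    url = (
        f"https://{resource_name}.openai.azure.com/openai/deployments/"
        f"{deployment_id}/completions?api-version={api_version}"
    )
    # Only the prompt is set by default; optional parameters are left out
    # so the service defaults apply unless you override them.
    body = {"prompt": prompt}
    if max_tokens is not None:
        body["max_tokens"] = max_tokens
    if temperature is not None:
        body["temperature"] = temperature
    return urllib.request.Request(
        url,
        data=json.dumps(body).encode("utf-8"),
        headers={"Content-Type": "application/json", "api-key": api_key},
        method="POST",
    )


def send(request):
    """Send the request and return the parsed JSON response."""
    with urllib.request.urlopen(request) as response:
        return json.load(response)


# Example usage (needs a real resource, deployment and key):
# request = build_completion_request("my-resource", "my-deployment", "<key>",
#                                    "Summarise this text: ...", max_tokens=200)
# print(send(request))
```

The custom connector built in the following steps makes this same HTTP call, with the key held in the connection rather than in code.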

Process:

If you’ve ever created a Power Platform connector before you’ll know there are various approaches to this – the API docs link to Swagger definitions on GitHub, however these will not work for the Power Platform because they’re in OpenAPI 3.0 format and the Power Platform currently needs OpenAPI 2.0. To work around this I created my connector from scratch and used the Swagger editor to craft the method calls to match the API docs. To save you some of this hassle you can copy/paste my Swagger definition below, and that should simplify the process substantially.

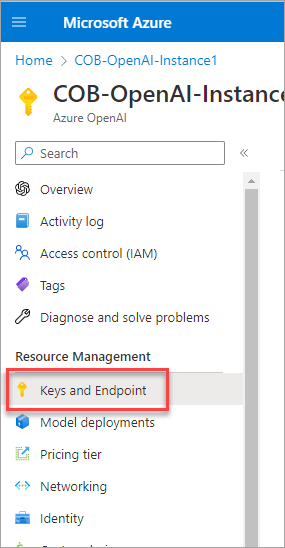

Before we get started, you’re going to need the details of your Azure OpenAI endpoint, so collect those now and store them safely (remembering that your API key is a sensitive credential). To do this, go back into the main Azure portal (not the OpenAI Studio) and head into the ‘Keys and Endpoint’ area:

Once there, use the screen to collect one of your keys and the endpoint URI:

Once you have those details, we’re ready to create the connector in the Power Platform. To do this, go to https://make.powerautomate.com/ and ensure you’re in the Power Platform environment to use.

From there, go to the Custom connectors area:

Hit the ‘New custom connector’ button and select ‘from blank’:

Give your connector a name:

We now start defining the connector – you can define each individual step if you like but you should find it easier to import from my definition below. Before that step, you can add a custom logo if you like in the ‘General’ area – this will make the connector easier to identify in Power Automate.

The next step is to download the Swagger definition and save it locally. If you choose to use my Swagger definition, you can get it from the embed below (NOTE: if you're reading on a phone this will not be shown!). Copy the definition and save it locally:

Make the following changes to the file and save:

- Replace ‘[YOUR ENDPOINT PREFIX HERE]’ in line 6 with your prefix – the final line should be something like cob-openai-instance1.openai.azure.com

- Replace ‘[YOUR DEPLOYMENT ID HERE]’ with your AI model deployment name from the Azure OpenAI Studio – in the steps above, my model name was cob-textdavinci-003 for example
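If you would rather see where those two values sit in an OpenAPI 2.0 document, the Python sketch below generates a minimal definition for the Completions call. This is not my definition from the embed: it is a stripped-down illustration, and the operation name, titles, parameter defaults and `api-version` value are all assumptions you may need to adjust before importing.

```python
import json


def build_connector_definition(host, deployment_id, api_version="2022-12-01"):
    """Return a minimal OpenAPI 2.0 (Swagger) definition as a dict.

    host is your endpoint, e.g. your-prefix.openai.azure.com.
    api_version is an assumption; use the version from the API docs.
    """
    return {
        "swagger": "2.0",
        "info": {"title": "Azure OpenAI (sketch)", "version": "1.0"},
        "host": host,
        "basePath": "/openai",
        "schemes": ["https"],
        "consumes": ["application/json"],
        "produces": ["application/json"],
        # API key sent in the api-key header, as the service expects.
        "securityDefinitions": {
            "api_key": {"type": "apiKey", "in": "header", "name": "api-key"}
        },
        "security": [{"api_key": []}],
        "paths": {
            "/deployments/{deployment-id}/completions": {
                "post": {
                    "operationId": "CreateCompletion",
                    "summary": "Create a completion",
                    "parameters": [
                        {"name": "deployment-id", "in": "path", "required": True,
                         "type": "string", "default": deployment_id},
                        {"name": "api-version", "in": "query", "required": True,
                         "type": "string", "default": api_version},
                        {"name": "body", "in": "body", "required": True,
                         "schema": {
                             "type": "object",
                             "required": ["prompt"],
                             "properties": {
                                 "prompt": {"type": "string"},
                                 "max_tokens": {"type": "integer"},
                                 "temperature": {"type": "number"},
                             },
                         }},
                    ],
                    "responses": {"200": {"description": "OK"}},
                }
            }
        },
    }


if __name__ == "__main__":
    definition = build_connector_definition(
        "your-prefix.openai.azure.com", "your-deployment-name"
    )
    print(json.dumps(definition, indent=2))
```

The output is JSON, which is also valid YAML, so it can be pasted into a Swagger editor in the same way as a YAML definition.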

The editor UI will now show on the left - paste your amended Swagger definition in here. The image below shows my host (prefixed ‘COB’) but you should see your equivalent:

Once this is done, simply hit the ‘Create connector’ button:

Your custom connector has now been created using the details from the Swagger definition.

Step 3.2 – create connection instance

Once the custom connector exists we create a connection from it – if you haven’t done this before, this is where credentials are specified. In the Power Automate portal, if you’re not there already go back into the Data > Custom connectors area and find the custom connector we created in the previous step – when you find it click the plus icon (+):

Paste in the API key previously retrieved for your Azure endpoint and hit ‘Create connection’:

The connection is now created and should appear in your list:

We’re now ready to test calling GPT from a Flow or Power App – in the next step we’ll use a Flow.

Step 4 – test calling GPT-3 from a Flow

Follow the normal process in Power Automate to create a Flow, deciding which flavour is most appropriate for your test. As a quick initial test you may want to simply create a manually-triggered Flow. Once in there, use the normal approach to add a new step:

In the add step, choose the ‘Custom’ area and find the connection created in the previous step:

If you used my YAML, the connector definition will give you a Flow action which exposes the things you might need to vary with some elements auto-populated – remember to make sure your model deployment ID is specified in the first parameter (it will be if you overwrote mine in the earlier step):

Let’s now add some details for the call – choose a prompt that you like (red box below) and specify the number of tokens and temperature you’d like the model to use (green box below):

If you now run the Flow, you should get a successful response with the generated text returned in the body:
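For reference, the response body is JSON with the generated text under `choices` and token counts under `usage`. The Python sketch below shows how those two pieces can be pulled out. The sample response is made up for illustration (only the field names follow the Completions API) and the helper names are hypothetical.

```python
# Illustrative response only: the field names follow the Completions API,
# but every value here is made up.
SAMPLE_RESPONSE = {
    "id": "cmpl-example",
    "object": "text_completion",
    "model": "text-davinci-003",
    "choices": [
        {
            "text": "\n\nA short generated answer.",
            "index": 0,
            "finish_reason": "stop",
        }
    ],
    "usage": {"prompt_tokens": 10, "completion_tokens": 20, "total_tokens": 30},
}


def extract_text(response):
    """Return the generated text from the first choice, without padding."""
    choices = response.get("choices") or []
    if not choices:
        raise ValueError("response contains no choices")
    return choices[0]["text"].strip()


def tokens_used(response):
    """Return the total token count, or None if usage is missing."""
    return response.get("usage", {}).get("total_tokens")


if __name__ == "__main__":
    print(extract_text(SAMPLE_RESPONSE))
    print(tokens_used(SAMPLE_RESPONSE))
```

In a Flow you would reach the same fields through the action's outputs rather than Python, but the path into the JSON is the same: the first item in `choices`, then `text`.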

If we expand this, here’s what our call to GPT-3 created in full for these parameters:

Power Platform Governance is an important component of any organization’s digital transformation effort that allows customers to stay in control of the data, applications, and processes. It is an integrated system of policies, standards, and procedures that ensure the correct use and management of technology. This governance framework provides organizations the assurance, confidence, and trust that their technology investments are secure, optimized, and compliant with the industry standards.

Power Platform Governance consists of three main components: Governance Policies, Governance Model, and Governance Reporting. Governance Policies are the foundation of the framework and define the standards, procedures, and rules that organizations must adhere to. The Governance Model is a set of tools, processes, and best practices that organizations use to implement and enforce the governance policies. Lastly, Governance Reporting is the process of monitoring, analyzing, and reporting on the adherence to the governance policies.

Overall, Power Platform Governance is an important component of any digital transformation effort, as it helps organizations to stay in control of their investments in technology, making sure that it is secure, optimized and compliant with the industry standards. It consists of three main components: governance policies, governance model, and governance reporting. This framework helps organizations to create a reliable and secure environment for their technology investments, ensuring that they are in compliance with the industry standards.

SUCCESS! You now have the full power of GPT-3 and other OpenAI models at your disposal in the Power Platform.

I recommend playing around with the max_tokens and temperature parameters to see different length responses and greater/lesser experimentation in the generation. Again, spend time in the OpenAI documentation to gain more understanding of the effect of these parameters.
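One way to structure that experimentation is to hold the prompt fixed and vary only the two parameters. The Python sketch below builds the set of request bodies for such a comparison. The function name and the example values are hypothetical, and sending each body to your deployment is left to whichever client you use.

```python
from itertools import product


def build_sweep_bodies(prompt, temperatures, max_tokens_options):
    """Build one request body per (temperature, max_tokens) combination."""
    return [
        {"prompt": prompt, "max_tokens": max_tokens, "temperature": temperature}
        for temperature, max_tokens in product(temperatures, max_tokens_options)
    ]


if __name__ == "__main__":
    # Example values chosen for illustration only.
    bodies = build_sweep_bodies(
        "Write a short overview of Power Platform governance.",
        temperatures=[0.0, 0.7],
        max_tokens_options=[100, 400],
    )
    for body in bodies:
        print(body)
```

Lower temperature values make the output more deterministic, while higher values allow more varied wording, so comparing responses side by side for the same prompt makes the effect easier to judge.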

Summary

Being able to call into powerful generative AI from the Power Platform provides a huge range of possibilities - imagine integrating GPT-3 capabilities into your apps and processes. You can automate responses to queries, generate content, or even translate, summarise, classify or moderate something coming into a process. With the Codex model, scenarios around code generation and checking are also open. Most organisations invested in Microsoft tech will want to use the Azure-hosted version of the OpenAI models, and this is the Azure OpenAI service as part of Azure Cognitive Services. The service requires an application to be approved before you can access it, but it's as simple as completing a form in most cases. The process outlined above allows you to connect to the service through a Power Platform custom connector, and this can be shared across your organisation and/or controlled as you need.