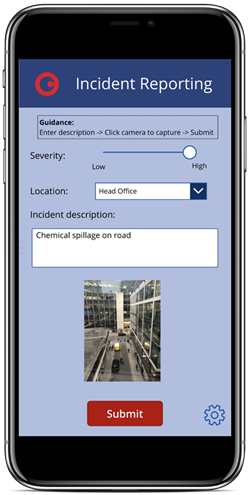

In the last article we talked about some examples of using AI in Office 365, and looked in detail at the idea of building an incident reporting app which combines common Office 365 building blocks with AI. Whilst Power Apps and SharePoint underpin our solution, we use AI to triage the incident by understanding what is happening in the image. Is this a serious emergency? Are there casualties or emergency services involved? Our image processing AI can interpret the picture, and add tags and a description to the file where it is stored in Office 365 - this can drive automated actions, such as alerting particular teams or having a human review the incident. We also fed a series of images into the AI to compare the results across different scenarios.
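To make the triage idea a bit more concrete, here's a minimal Python sketch of how the tags returned by the AI could drive those automated actions. The tag lists and the action names are purely my own illustrative assumptions - real rules would need tuning against real incident photos.

```python
# Hypothetical tag lists - illustrative only, not taken from the real app.
EMERGENCY_TAGS = {"fire", "smoke", "ambulance", "fire truck", "police"}
REVIEW_TAGS = {"crowd", "accident", "injury"}


def triage(tags):
    """Map the tags found in an incident image to a follow-up action."""
    found = {tag.lower() for tag in tags}
    if found & EMERGENCY_TAGS:
        # Signs of a serious incident or emergency services in the picture.
        return "alert-emergency-team"
    if found & REVIEW_TAGS:
        # Not clearly an emergency, but worth a person taking a look.
        return "human-review"
    return "log-only"


if __name__ == "__main__":
    print(triage(["person", "indoor", "crowd"]))
```

Keeping the rules as simple sets of tag names means they can be changed without touching the rest of the solution.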

Overall, this article is part of a series:

- AI in Office 365 apps - a scenario, some AI examples and a sample Power App

- AI in Office 365 apps - choosing between Power Apps AI Builder, Azure Cognitive Services and Power Automate (this article)

- AI in Office 365 apps - pricing and conclusions

Choosing between Power Apps AI Builder, Azure Cognitive Services and Power Automate

As mentioned in the last article, there are a number of ways we could build this app:

- Use of Power Apps AI Builder

- A Power App which talks directly to Azure Cognitive Services (via a Custom Connector)

- A Power App which uses a Power Automate Flow to consume AI services

Option 1 - Power Apps AI Builder

AI Builder is still in preview at the time of writing (February 2020, with release expected in April 2020), and offers four models.

How the app would be built

In the case of our scenario, we said that we wanted the images to be tagged in SharePoint - and here's where we run into a consideration with AI Builder:

Option 2 - Azure Cognitive Services

Another way of bringing AI into an app is to plug directly into Azure Cognitive Services. As you might expect, this is a developer-centric approach which is more low-level - we're not in the Power Platform or another low-code framework here. The big advantage is that there's a wider array of capabilities to use. Compared to the other approaches discussed here, we're not restricted to whatever Microsoft have integrated into the Power Platform. The high-level areas of Cognitive Services currently extend to:

- Decision - detect anomalies, do content moderation etc.

- Language - services such as LUIS, text analytics (e.g. sentiment analysis, extract key phrases and entities), translation between 60+ languages

- Speech - convert between text and speech (both directions), real-time speech translation from audio, speaker recognition etc.

- Vision - Computer Vision (e.g. tag and describe images, recognize objects, celebrities, landmarks, brands, perform OCR, generate thumbnails etc.), form data extraction, ink/handwriting processing, video indexing, face recognition and more

    - NOTE - this is the service that's relevant to the scenario in this article, in particular the Computer Vision API's ability to tag and describe images

- Web search - Bing autosuggest, Bing entity/image/news/visual/video search and more

How the app would be built

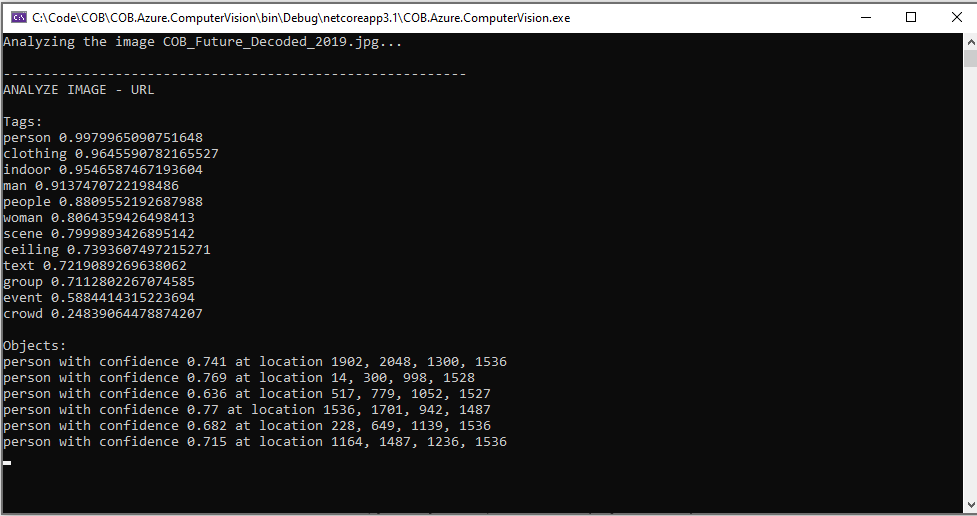

If I write some code to consume the Computer Vision API and send the above image to it, I get a response that looks like this (notice the tags such as "person", "indoor", "ceiling", "event", "crowd" and so on):

Code sample:

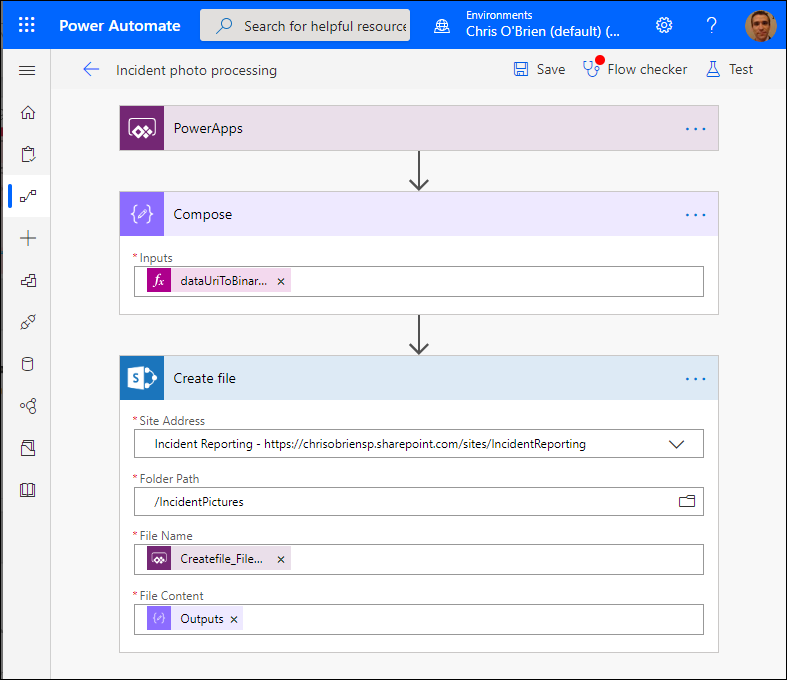
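As a rough sketch of what such code could look like in Python, the snippet below builds a request to the Computer Vision "analyze" operation and pulls the tags and best caption out of the JSON response. The function names are my own, and the `v3.2` version segment in the URL is an assumption that should match whatever your Azure resource supports; the endpoint and key come from your own Cognitive Services resource.

```python
import json
import urllib.parse
import urllib.request


def build_analyze_request(endpoint, key, image_bytes, features=("Tags", "Description")):
    """Build (but do not send) a POST to the Computer Vision analyze operation."""
    query = urllib.parse.urlencode({"visualFeatures": ",".join(features)}, safe=",")
    # Assumption: API version v3.2 - adjust to the version your resource exposes.
    url = f"{endpoint.rstrip('/')}/vision/v3.2/analyze?{query}"
    headers = {
        "Ocp-Apim-Subscription-Key": key,
        "Content-Type": "application/octet-stream",  # raw image bytes in the body
    }
    return urllib.request.Request(url, data=image_bytes, headers=headers, method="POST")


def summarize(analysis):
    """Extract tag names and the highest-confidence caption from the response JSON."""
    tags = [tag["name"] for tag in analysis.get("tags", [])]
    captions = analysis.get("description", {}).get("captions", [])
    best = max(captions, key=lambda c: c["confidence"], default=None)
    return {"tags": tags, "caption": best["text"] if best else None}


def analyze_image(endpoint, key, image_bytes):
    """Send the image to the service and return the summarized result."""
    request = build_analyze_request(endpoint, key, image_bytes)
    with urllib.request.urlopen(request) as response:
        return summarize(json.load(response))
```

Splitting the request building and response parsing away from the network call keeps each piece easy to test without an Azure subscription to hand.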

Option 3 - Using Power Automate (Flow) to consume AI

The final option presented here is to create a Flow which will do the work of tagging and describing the incident report images. I think this is by far the easiest way, and it is perhaps an overlooked approach for building AI into your apps - I recommend it highly. Power Automate is your friend. Note, however, that these are premium Flow actions - we'll cover licensing and pricing more in the next post, but for now understand that bringing in AI capabilities this way does incur additional cost (as it does with the other two approaches).

In the scenario of our Power App for incident reporting, the simplest implementation is probably this:

- Power App uploads image to SharePoint document library

- Flow runs using the "SharePoint - when a file is created in a folder" trigger

- The Flow calls Azure Cognitive Services (using the native Flow actions for this)

- Once the tags and image descriptions have been obtained, they are written back to the file in SharePoint as metadata
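The last of those steps is really just a mapping exercise. In the Flow it's done with actions rather than code, but the logic can be sketched in Python as below. The column names `IncidentTags` and `IncidentDescription` and the confidence threshold are hypothetical - substitute whatever columns your document library actually has.

```python
def to_sharepoint_fields(analysis, min_confidence=0.5, max_tags=10):
    """Turn a Computer Vision style result into values for two library columns."""
    # Keep only reasonably confident tags, most confident first.
    confident = sorted(
        (tag for tag in analysis.get("tags", []) if tag["confidence"] >= min_confidence),
        key=lambda tag: tag["confidence"],
        reverse=True,
    )
    captions = analysis.get("description", {}).get("captions", [])
    return {
        # Hypothetical column names - not taken from the real library.
        "IncidentTags": "; ".join(tag["name"] for tag in confident[:max_tags]),
        "IncidentDescription": captions[0]["text"] if captions else "",
    }
```

Filtering on confidence before writing back keeps low-quality guesses out of the metadata that later drives alerts and reviews.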

Here's what my end-to-end Flow looks like:

- Describe Image Content

- Tag Image

IncidentPhotoProcessing.Run(PictureFilename, First(Photos).Url);

...and there we go, a fairly quick and easy way to get the photo for my incident into SharePoint so that the AI can do its processing.

Summary

We've looked at three possible approaches in this post to building an Office 365 application which uses AI - Power Apps AI Builder, use of Azure Cognitive Services from code and use of actions in Power Automate which relate to AI. The findings can be summarized as:

- Different skills are needed for each approach:-

    - Power Automate is the simplest to use because it provides actions which plug into AI easily - just build a Flow which can receive the image, and then use the Computer Vision actions shown above

    - Direct use of Azure Cognitive Services APIs requires coding skills (either use the provided SDKs for .NET, JavaScript etc. or make your own REST requests to the Azure endpoints), but is a powerful approach since the full set of Microsoft AI capabilities is exposed

- Capabilities are different across the options:-

    - Power Apps AI Builder has some constraints regarding our image processing scenario. The "object detection" model is great for identifying if a known object is present in the image, but can't help with identifying any arbitrary objects or concepts in the image

    - Azure Cognitive Services underpins all of the AI capabilities in Office 365, and offers many services not exposed in Power Apps or Power Automate. As a result, it offers the most flexibility and power, at the cost of more effort and different skills to implement

- Requirements and context are important:-

    - In our scenario we're talking about a Power App which captures incident data and stores it in SharePoint - in other words, we've already specified that the front-end should be Power Apps. In this context, integrating Azure Cognitive Services directly would be a bit more challenging than the other two approaches (but is relatively simple from a coded application). In Power Apps, we'd need a custom connector to bring the code in (probably in the form of an Azure Function), and that's certainly more complex than staying purely in the Power Platform

    - In the design process, other requirements could lead to one technical approach being much more appropriate than another. As another example, if the app needed a rich user interface that was difficult to build in Power Apps, the front-end may well be custom code. At this point, there's an argument for saying that using code for the content services back-end of the application makes sense too
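To give a feel for the custom connector route mentioned above, here's a minimal Python sketch of the kind of logic that could sit behind the connector. It deliberately avoids any real Azure Functions SDK calls - `handle_connector_request`, the `imageUrl` property and the response shape are all assumptions of mine, and the `analyze` argument stands in for whatever code actually calls Cognitive Services.

```python
import json


def handle_connector_request(body, analyze):
    """Handle a JSON request from a (hypothetical) custom connector.

    body    - raw JSON string posted by the connector
    analyze - callable taking an image URL and returning a Computer Vision style dict
    Returns a (status_code, response_dict) pair.
    """
    try:
        image_url = json.loads(body)["imageUrl"]
    except (ValueError, KeyError, TypeError):
        # Malformed JSON, or JSON without the property we need.
        return 400, {"error": "Expected JSON with an 'imageUrl' property"}

    analysis = analyze(image_url)
    captions = analysis.get("description", {}).get("captions", [])
    # Return a trimmed-down shape that is easy to consume from Power Apps.
    return 200, {
        "tags": [tag["name"] for tag in analysis.get("tags", [])],
        "description": captions[0]["text"] if captions else "",
    }
```

Returning a small, flat response keeps the connector definition simple, which matters when the consumer is a Power App rather than another piece of code.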