Microsoft 365 has become the centre of the digital universe for many organisations, and it makes sense to explore integrating other platforms and data sources so that the employee experience is simpler and less fragmented. A great example is search - perhaps most of the organisation's documents are now in Microsoft 365, but when technical knowledge base articles live in ServiceNow, client data lives in Salesforce and there's still a file share of archived (but still useful) content, the net result can be a lot of context switching and time lost to searching. No wonder research from McKinsey and IDC suggests the average knowledge worker spends 20-30% of their time simply looking for information.

The benefits of a consolidated search experience often grow with each additional location that is integrated - great things can happen when data in a particular system becomes more accessible or is put in front of new audiences. Sales and marketing teams might spend all day in the CRM, but putting client data in front of other groups in the company can help them make better decisions too. In Microsoft 365, the Graph Connectors framework makes this possible, so that's what we're looking at here.

This post is part of a short series:

- Bring external data into Microsoft 365 using Graph Connectors (this article)

- Microsoft 365 Graph Connectors - creating custom search verticals and result types

For us here at Content+Cloud, a good example of a valuable content source is ServiceNow - it's the platform that powers the Managed Services Provider side of our business, providing core ITSM capabilities around problem, incident and change management as well as various forms of automation, AI and analytics which help us maintain a great service. Something ServiceNow does quite well is knowledge base tooling - which isn't the easiest thing for me to say as a Microsoft person immersed in Viva Topics and SharePoint Syntex. But it's true: ServiceNow has all sorts of concepts like the Article Quality Index scoring mechanism, the ability to easily create knowledge from tasks and the ability to identify possible duplicates - capabilities that suit its role in providing curated knowledge to support engineers, where misinformation can be disastrous. We follow Microsoft and ServiceNow integration closely here at C+C, and there have been LOTS of recent developments - one example is a Microsoft Graph Connector for ServiceNow, and when this became available I knew it would be something useful for us.

Integrating external data with Microsoft Graph Connectors

At the time of writing Microsoft provide nine native connectors:

- Azure Data Lake Storage Gen2

- Azure DevOps

- Azure SQL and Microsoft SQL Server

- Enterprise websites

- MediaWiki

- File share

- Oracle SQL

- Salesforce (preview)

- ServiceNow

In addition, the Microsoft Graph connectors gallery showcases third-party connectors created by other vendors; these are usually paid products, but they let you go beyond what's possible with Microsoft's own connectors. Finally, it's possible to create your own Graph connector, as illustrated by the Graph connector sample on GitHub.
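For the custom route, a connector first registers a connection with Microsoft Graph before it can push any content. The sketch below assumes the Microsoft Graph v1.0 `external/connections` endpoint and only builds the request rather than sending it. The connection ID, name and description are hypothetical placeholders, and the ID rules in the comments reflect our reading of the `externalConnection` documentation, not anything specific to the ServiceNow connector.

```python
import re
from typing import Dict, Tuple

GRAPH_BASE = "https://graph.microsoft.com/v1.0"


def build_create_connection(connection_id: str, name: str,
                            description: str) -> Tuple[str, Dict[str, str]]:
    """Return (url, json_body) for POST /external/connections.

    Sketch only: builds the request without sending it; all values
    passed in are hypothetical.
    """
    # Connection IDs are documented as 3-32 alphanumeric characters.
    if not re.fullmatch(r"[A-Za-z0-9]{3,32}", connection_id):
        raise ValueError("connection id must be 3-32 alphanumeric characters")
    # IDs may not begin with "Microsoft"; checked case-insensitively to be safe.
    if connection_id.lower().startswith("microsoft"):
        raise ValueError("connection id must not begin with 'Microsoft'")
    body = {"id": connection_id, "name": name, "description": description}
    return f"{GRAPH_BASE}/external/connections", body


if __name__ == "__main__":
    url, body = build_create_connection(
        "servicenowkb", "ServiceNow KB", "Knowledge articles from ServiceNow")
    print("POST", url)
    print(body)
```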

Improving search and discoverability through integration

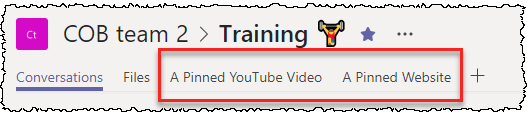

Being able to index content in other systems allows you to provide a consolidated experience, with the additional location appearing as a search vertical (tab) in the results. You can control how results are displayed, including changing the pieces of data shown and the formatting. Clicking the tab runs your search across this content source (i.e. ServiceNow in my case):

Configuring the link between Microsoft 365 and ServiceNow

Configuring an OAuth app registration in ServiceNow

In the case of ServiceNow, the first step is to create an OAuth endpoint in ServiceNow for your Graph connector to use. This is documented at ServiceNow Graph connector for Microsoft Search. In my case I'm using a ServiceNow PDI (Personal Developer Instance) and the authentication type I used is ServiceNow OAuth. Here's what my ServiceNow endpoint looks like:

With ServiceNow OAuth, a ServiceNow user account is also required for the connection, and this account needs to be granted the knowledge role in the platform. The OAuth app registration and the user identity are used together to authenticate.
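To see how the OAuth application and the ServiceNow account combine, here is a minimal sketch of a ServiceNow OAuth token request using the password grant against the instance's `oauth_token.do` endpoint. This is an assumption-laden credential check, not necessarily the flow the Graph connector uses (its setup goes through an interactive consent step); the instance name and credentials are hypothetical placeholders, and the sketch only builds the request.

```python
from typing import Tuple
from urllib.parse import urlencode


def build_servicenow_token_request(instance: str, client_id: str,
                                   client_secret: str, username: str,
                                   password: str) -> Tuple[str, str]:
    """Return (token_url, form_body) for a ServiceNow OAuth password grant.

    Both halves of the credential appear together: the OAuth application
    (client_id/client_secret) and the named ServiceNow user.
    """
    token_url = f"https://{instance}.service-now.com/oauth_token.do"
    form = {
        "grant_type": "password",
        "client_id": client_id,          # from the OAuth application registry
        "client_secret": client_secret,
        "username": username,            # account holding the knowledge role
        "password": password,
    }
    # The body is sent as application/x-www-form-urlencoded.
    return token_url, urlencode(form)


if __name__ == "__main__":
    url, form = build_servicenow_token_request(
        "dev12345", "my-client-id", "my-client-secret", "graph.connector", "p@ss")
    print(url)
    print(form)
```

A successful response is JSON containing an `access_token`; if this step fails, the problem is likely in the OAuth application or the account rather than in Microsoft 365.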

Once you have the ServiceNow side in place, it's time to create the Graph connector in Microsoft 365.

Creating a Graph connector in Microsoft 365

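After adding the ServiceNow connector in Microsoft 365 and entering the connection details, you are taken through a consent flow for the ServiceNow account: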

Once the consent is granted the connection is tested by Microsoft 365 and the result shown:

The next few screens allow you to specify exactly how the ServiceNow data should be indexed. This process will be different depending on your data source, but for ServiceNow I can first pick the properties/fields to bring in and any additional filter I want to supply:
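Before settling on a filter, it can help to preview which knowledge articles it matches. Assuming the filter is written as a ServiceNow encoded query (for example, only published articles), the same query can be tried against the ServiceNow Table API for the `kb_knowledge` table. The sketch below only builds the URL; the instance name, field list and filter are illustrative, and this is not necessarily how the connector itself reads the data.

```python
from typing import List
from urllib.parse import urlencode


def build_kb_query_url(instance: str, fields: List[str], encoded_query: str,
                       limit: int = 10) -> str:
    """Return a Table API URL listing knowledge articles that match a filter."""
    params = {
        "sysparm_query": encoded_query,      # ServiceNow encoded query
        "sysparm_fields": ",".join(fields),  # only the fields you plan to index
        "sysparm_limit": str(limit),         # keep previews small
    }
    return (f"https://{instance}.service-now.com/api/now/table/kb_knowledge"
            f"?{urlencode(params)}")


if __name__ == "__main__":
    print(build_kb_query_url(
        "dev12345",
        ["number", "short_description", "author", "sys_updated_on"],
        "workflow_state=published",
        limit=5,
    ))
```

Calling this URL as the same ServiceNow account should give a rough idea of what that account can see with the filter applied.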

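On the permissions screen, I allow 'Everyone' to see the indexed content in my case, since I'm effectively using a form of app authentication (ServiceNow OAuth plus a named ServiceNow user) rather than delegated authentication with each user's own identity, which is what would support restricting results to what each person can access in ServiceNow.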
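For comparison, a custom connector built on the Microsoft Graph external items API expresses the same choice as an access control list on each item. In the sketch below, which is based on our reading of the Graph `acl` resource rather than on how the ServiceNow connector stores permissions, an 'Everyone' grant uses the tenant ID as its value, while narrower access grants specific Microsoft Entra group IDs. All IDs are placeholders.

```python
from typing import Dict, List, Optional


def build_acl(tenant_id: str,
              group_ids: Optional[List[str]] = None) -> List[Dict[str, str]]:
    """Return an externalItem ACL: everyone in the tenant, or named groups only."""
    if not group_ids:
        # Equivalent of the 'Everyone' option: grant the whole tenant.
        return [{"type": "everyone", "value": tenant_id, "accessType": "grant"}]
    # Narrower alternative: grant only the listed Entra ID groups.
    return [{"type": "group", "value": gid, "accessType": "grant"}
            for gid in group_ids]


if __name__ == "__main__":
    print(build_acl("00000000-0000-0000-0000-000000000000"))
    print(build_acl("00000000-0000-0000-0000-000000000000",
                    ["11111111-1111-1111-1111-111111111111"]))
```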

You can also set which properties are Queryable, Searchable, Refinable and Retrievable within Microsoft Search. This controls which properties can be used as refiners (filters) in search and which can be queried on; for example, you might make the author property queryable so that a search on a person's name returns the ServiceNow articles they wrote.
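In a custom connector, the same four flags are set per property in the schema registered with Microsoft Graph (`isQueryable`, `isSearchable`, `isRefinable`, `isRetrievable`). The sketch below uses hypothetical property names for knowledge articles and makes `author` queryable and refinable, so a search on a person's name can match the articles they wrote. It also rejects a property that is both searchable and refinable, which, as far as we understand the Graph schema documentation, is not allowed.

```python
from typing import Any, Dict, List, Optional


def schema_property(name: str, prop_type: str, *, searchable: bool = False,
                    queryable: bool = False, retrievable: bool = False,
                    refinable: bool = False,
                    labels: Optional[List[str]] = None) -> Dict[str, Any]:
    """Describe one property of an external connection schema."""
    if searchable and refinable:
        # A property can be full-text searchable or a refiner, not both.
        raise ValueError(f"{name}: cannot be both searchable and refinable")
    prop: Dict[str, Any] = {
        "name": name,
        "type": prop_type,
        "isSearchable": searchable,    # matched against search terms
        "isQueryable": queryable,      # can be targeted by property queries
        "isRetrievable": retrievable,  # can be shown in search results
        "isRefinable": refinable,      # can be used as a filter/refiner
    }
    if labels:
        prop["labels"] = labels        # semantic labels such as "title" or "url"
    return prop


def build_schema(properties: List[Dict[str, Any]]) -> Dict[str, Any]:
    """Body for PATCH /external/connections/{id}/schema."""
    return {"baseType": "microsoft.graph.externalItem", "properties": properties}


if __name__ == "__main__":
    schema = build_schema([
        schema_property("title", "String", searchable=True, queryable=True,
                        retrievable=True, labels=["title"]),
        schema_property("author", "String", queryable=True, retrievable=True,
                        refinable=True),
        schema_property("url", "String", retrievable=True, labels=["url"]),
    ])
    print(schema)
```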

All that remains is for Microsoft 365 to publish the connector and start indexing the external source.
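Indexing ultimately means each knowledge article becomes an external item in Microsoft Graph. As a hedged illustration of that mapping (not the ServiceNow connector's actual implementation), the sketch below turns a simplified `kb_knowledge` record into a `PUT /external/connections/{id}/items/{itemId}` request. It assumes the record already holds display values, reuses the article's `sys_id` as the item ID, grants access to everyone in the tenant and builds the article link with the `kb_view.do` URL pattern; the record contents and property names are hypothetical and would need to match your registered schema.

```python
from typing import Any, Dict, Tuple

GRAPH_BASE = "https://graph.microsoft.com/v1.0"


def build_item_request(connection_id: str, instance: str, tenant_id: str,
                       record: Dict[str, str]) -> Tuple[str, Dict[str, Any]]:
    """Map a simplified kb_knowledge record to an externalItem PUT request."""
    item_id = record["sys_id"]  # stable ServiceNow ID, reused as the item ID
    url = f"{GRAPH_BASE}/external/connections/{connection_id}/items/{item_id}"
    body = {
        # Visible to everyone in the tenant, mirroring the 'Everyone' choice.
        "acl": [{"type": "everyone", "value": tenant_id, "accessType": "grant"}],
        # Property names must match the schema registered for the connection.
        "properties": {
            "title": record["short_description"],
            "author": record["author"],
            "url": (f"https://{instance}.service-now.com/kb_view.do"
                    f"?sysparm_article={record['number']}"),
        },
        # The article body is HTML in ServiceNow, so index it as HTML content.
        "content": {"value": record["text"], "type": "html"},
    }
    return url, body


if __name__ == "__main__":
    sample = {
        "sys_id": "0123456789abcdef0123456789abcdef",
        "number": "KB0010001",
        "short_description": "Resetting a VPN token",
        "author": "Jane Example",
        "text": "<p>Steps to reset a VPN token.</p>",
    }
    url, body = build_item_request("servicenowkb", "dev12345",
                                   "00000000-0000-0000-0000-000000000000", sample)
    print("PUT", url)
    print(body["properties"]["url"])
```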

The result

Wrap-up

So that's the process and it's not too difficult - most of the effort is on the side of the external system since that's where you'll need to configure authentication. In the next post we'll look at controlling the appearance of search results with:

- Custom search verticals

- Custom search result types